We wish to explore the design of tools to give people access to the results of individual decisions, the pertinent context and the overall relationship between the goals of the system and its operational outcomes. In this manner we seek to create a pragmatic, principled and comprehensible explanation of an AI-based decision-making system.

Recent debate in academia, industry and public settings has given voice to a battery of concerns about the methods and consequences of Big Data and AI-based systems, whose decisions increasingly have a significant impact upon the public. This has occurred against a background in which major innovation in the regulation of how public and private bodies use data about individuals has created widespread interest in individual privacy in the context of rapidly developing digital technologies. Fierce public debates over the nature of bias in machine reasoning are rapidly changing public perception, and it is against this perception that the public will assess technology-based innovations in sectors such as accountancy, law and financial services. Initially, individual public commentators and academic researchers drove the debate, but activist groups, public bodies and legislators are now joining in. Public views of what is acceptable, let alone desirable, in automated decision making, whatever the method or technology behind it, are evolving rapidly. This increasing and visible concern for the implications and consequences of what were, until recently, technical design decisions must have an impact upon any actor who deals with the general public and deploys tools and practices with embedded algorithmic decision making.

At present the industry and many academics are focussed primarily upon the technical questions surrounding the deployment of what have come to be called ‘black box’ applications, and upon the requirement to increase, or indeed create, some transparency around their decisions. This problem will be hard, indeed very hard, to address, not least because the current generation of predictive technologies is characterised by inner workings that are opaque by design, to the technical expert let alone the layperson. This situation has variously been described as a threat to the industry, as something that limits or prevents deployment in some settings, and as an intractable technical challenge.

Legal scholars have contended that the right contained within the GDPR to “meaningful information about the logic involved” provides for “a more general form of oversight, rather than a right to an explanation of a particular decision” [italics in original]. The information should consist of “simple ways to tell the data subject about the rationale behind, or the criteria relied on in reaching the decision, without necessarily always attempting a complex explanation of the algorithms used or disclosure of the full algorithm.”

The example of “meaningful information” given at p 14 restricts itself to suggesting that the system should provide (i) input information provided by the data subject, (ii) relevant information provided by others (e.g. credit history) and (iii) relevant public information used in the decision (e.g. public records of fraud). This example suggests that no information about the “innards” of the decision-making process, that is, anything that seeks to decompose the decision-making algorithm, need be given. In effect, individuals who feel they have been subject to unfair decision-making practices, or to their consequences, will have very little leverage with which to challenge those decisions and consequences.

Our response to these and other problems, both practical and ethical, besetting AI and other applications is to propose that technologists and businesses alike reframe the problem. We suggest a switch of emphasis away from attempts to explain the inner workings of the black box (the how of a decision, if you will) and towards the creation of systems that can intrinsically offer a useful and usable explanation of what they are trying to achieve, giving users and others affected by the system a comprehensive description of its goals and its impacts. The emphasis is placed upon showing users a plain English description of the goals of the exercise, allied with a usable interface that describes the implications of its decisions for the individual user or customer.

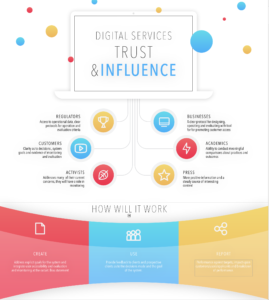

We think the time has arrived when the accumulated evidence about the different sources of bias in systems, whether they derive from the software tools, the human operators or the interaction of the two (Chouldechova, 2017), can be used to describe a process in which the ethical and technical issues are addressed in a structured way, both to meet these concerns and to create a better system for operators and the public alike. From the outset it is important to gain input from, and work with, all parties affected by the development of a system.

We envisage a hierarchy of access, first and foremost for the individual consumer, so that they can see the nature and impact of the decision for themselves and place it in the context of data from other people with similar requirements and circumstances.
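As an illustration of placing a decision in the context of similar cases, the sketch below compares an individual's outcome with past decisions for people in comparable circumstances. It is a minimal sketch under stated assumptions: the `Decision` record and its fields, the `similar_cases` and `peer_context` helpers, and the rule that "similar" means a requested amount and an income both within 20% of the individual's are all hypothetical choices, not part of any system described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    # Hypothetical record of one automated decision (e.g. a loan application).
    requested_amount: float
    income: float
    approved: bool


def within(value: float, reference: float, tolerance: float) -> bool:
    """True if value lies within a relative tolerance of reference."""
    return abs(value - reference) <= tolerance * abs(reference)


def similar_cases(history, person, tolerance=0.2):
    """Past decisions whose amount and income are both close to the person's.
    The 20% default tolerance is an assumed, illustrative notion of similarity."""
    return [
        d for d in history
        if within(d.requested_amount, person.requested_amount, tolerance)
        and within(d.income, person.income, tolerance)
    ]


def peer_context(history, person, tolerance=0.2):
    """Summarise the person's own outcome alongside how similar people fared."""
    peers = similar_cases(history, person, tolerance)
    if not peers:
        return {"outcome": person.approved, "peers": 0, "peer_approval_rate": None}
    approved = sum(d.approved for d in peers)  # bools sum as 0/1
    return {
        "outcome": person.approved,
        "peers": len(peers),
        "peer_approval_rate": approved / len(peers),
    }
```

With a history in which three past applicants are comparable and two of them were approved, `peer_context` would report a peer approval rate of 2/3 next to the individual's own outcome. How similarity is defined is the key design choice, and it is one the affected parties would need to help shape.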

For public services, data on system outputs would be made publicly available, at useful levels of aggregation, to individual clients, the wider public, regulators and operators. In commercial settings the data would respect commercial confidentiality but would again be open, to differing degrees, to individual customers, regulators and operators.
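The hierarchy of access could be written down as an explicit, reviewable policy table. The sketch below is hypothetical throughout: the role and view names, the `ACCESS_POLICY` table and the choice to deny access to any combination not listed are illustrative assumptions that follow the distinction drawn above between public services and commercial settings.

```python
from enum import Enum


class Role(Enum):
    DATA_SUBJECT = "individual client or customer"
    PUBLIC = "wider public"
    REGULATOR = "regulator"
    OPERATOR = "operator"


class View(Enum):
    OWN_DECISION_AND_PEERS = "own decision plus context from similar cases"
    AGGREGATE = "published aggregate statistics"
    DETAILED_AGGREGATE = "finer-grained aggregate statistics"
    NONE = "no access"


# Hypothetical policy: (sector, role) -> view. Public services publish outputs
# at useful aggregations; commercial settings open data to customers,
# regulators and operators in differing degrees, but not to the wider public.
ACCESS_POLICY = {
    ("public", Role.DATA_SUBJECT): View.OWN_DECISION_AND_PEERS,
    ("public", Role.PUBLIC): View.AGGREGATE,
    ("public", Role.REGULATOR): View.DETAILED_AGGREGATE,
    ("public", Role.OPERATOR): View.DETAILED_AGGREGATE,
    ("commercial", Role.DATA_SUBJECT): View.OWN_DECISION_AND_PEERS,
    ("commercial", Role.REGULATOR): View.DETAILED_AGGREGATE,
    ("commercial", Role.OPERATOR): View.DETAILED_AGGREGATE,
}


def view_for(sector: str, role: Role) -> View:
    """Look up the view a role receives; unlisted combinations get no access."""
    return ACCESS_POLICY.get((sector, role), View.NONE)
```

Denying by default means that opening data to a new audience requires a deliberate change to the table, and the table itself is something an operator could publish.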

It is most important to include in this arrangement evidence of the nature of the review and evaluation data collected by the operator, and of how transparently the operator applies that knowledge to the operation of the system. Creating and maintaining the credibility of a system depends on its owner and operator being transparent about the means and methods they will use to monitor its operation and to intervene where and when it becomes necessary. Clearly some applications, such as the use of facial recognition technology by civilian police forces or cancer screening services operated by the NHS, will require more clarity about this process than some commercial applications. It should be clear, however, that commercial confidentiality will not suffice as an explanation or reason for failing to exercise due diligence where customers can act in their own best interests in the face of failings by operators.
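One simple, publishable monitoring method is to compare favourable-outcome rates across groups at regular intervals and to flag for human review any group that falls well behind the best-served group. The sketch below is a minimal illustration under assumed inputs: decisions arrive as `(group, favourable)` pairs, and both the relative-rate comparison and the 0.8 threshold are hypothetical settings an operator would need to choose and justify, not a standard endorsed here.

```python
from collections import defaultdict


def outcome_rates(decisions):
    """Favourable-outcome rate per group from (group, favourable) pairs."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        favourable[group] += int(ok)
    return {g: favourable[g] / totals[g] for g in totals}


def groups_needing_review(decisions, threshold=0.8):
    """Groups whose rate is below `threshold` times the best group's rate.
    `threshold` is a hypothetical value the operator would publish."""
    rates = outcome_rates(decisions)
    if not rates:
        return []
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if r < threshold * best)
```

For instance, if group A receives a favourable outcome in 8 of 10 cases and group B in 5 of 10, B falls below 0.8 × 0.8 = 0.64 and would be flagged. Outcome-rate comparison is only one of several possible fairness measures, so the choice of measure is itself something an operator would need to disclose.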

We wish to explore the design of tools that give customers and operators access to the results of individual decisions, the local and pertinent context, and the overall relationship between the goals of the system and its operational outcomes. In this manner we seek to create a pragmatic, principled, accessible and comprehensible explanation of an AI-based decision-making system.

Four design principles:

1. A system has to be explicit, in its everyday operation, about who will benefit from the application and who will be the (data) subject(s) of the system, and must inform each of these parties about the other.

2. Those who claim benefit will have to design a system that can demonstrate its aims and objectives to the data subject(s) as well as to shareholders and stakeholders.

3. Any system must be designed into existence such that its continuing capacity to deliver its aims and objectives can be reviewed by all parties (see (1) above), providing evidence that the benefit(s) are real and continuing.

4. Any system that makes a person or group of people into data subjects must include those people or groups in designing both its aims and objectives and the system itself.
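Principle 3 asks for evidence that benefits are real and continuing. A minimal sketch, assuming each stated aim is paired with one measurable indicator and a target value, might produce a plain-English review that every party can read. The `Objective` class, its fields, the `review` function and the example aims are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Objective:
    # Hypothetical: a plain-English aim paired with a measurable indicator.
    description: str
    target: float              # minimum acceptable value of the indicator
    observations: list[float]  # indicator values, one per review period

    def latest(self) -> float | None:
        return self.observations[-1] if self.observations else None

    def is_met(self) -> bool:
        value = self.latest()
        return value is not None and value >= self.target


def review(objectives):
    """One plain-English status line per objective, based on the latest period."""
    lines = []
    for obj in objectives:
        value = obj.latest()
        if value is None:
            status = "no evidence yet"
        elif obj.is_met():
            status = f"met ({value:.2f} against target {obj.target:.2f})"
        else:
            status = f"NOT met ({value:.2f} against target {obj.target:.2f})"
        lines.append(f"{obj.description}: {status}")
    return lines
```

Reporting "no evidence yet" rather than silently omitting an aim keeps unmeasured objectives visible, which matters if the review is to show that benefits are continuing rather than merely claimed.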