We demonstrate the cruelty of current computational reasoning artefacts when applied to decision making in human social systems. We see this unintended cruelty not as a direct expression of the values of those creating or using algorithms, but as a certain consequence of their use nevertheless.

Below is a transcript of a paper Alex gave at the EVA Conference at Aalborg University in Copenhagen in May 2018. It was the product of the preceding 24 months of our work with algorithmic decision making. We have both designed and built machine learning algorithms and researched the effects and outcomes of their use. The paper explored some of the extremes of what we have experienced. The theme of the conference was “Politics of the Machines” and our colleagues were a fantastic mix of artists and academics.

Abstract

We seek to demonstrate the cruelty of current computational reasoning artefacts when applied to decision making in human social systems. We see this cruelty as unintended and not a direct expression of the motives and values of those creating or using algorithms, but, in our view, a certain consequence nevertheless. Second, we seek to identify the key aspects of some examples of AI products and services that demonstrate these properties and consequences, and relate these to the form of reasoning that they embody. Third, we show how the reasoning strategies developed, and now increasingly deployed, by computer and data science have necessary, special and damaging qualities in the social world. We briefly note how the narrative underpinning the creation and use of AI and other tools gives them power in neoliberal economies, placing a disempowered data ‘subject’ in an inferior, economically and politically supine position from which they must defend themselves if they so choose. Finally, we explore the issues that arise if it is possible to imagine, and indeed to ‘do’, work that, whilst deploying these techniques, does not necessarily reflect or help to create the impoverished notion of human thinking embodied in current AI, or what we call pseudo-cognitive products. We offer some ‘found’ artefacts which we believe might be considered art in this context.

Alexander Hogan

Christian Tilt

Kevin Hogan

ETIC Lab

May 2018

Notes on the Law of Unintended Barbarity (LoUB)

As algorithms are increasingly used to study, monitor and police humans and human behaviour, as well as capital and commercial information, they have also come to play a role not just in supporting decisions about critical and often intimate moments of people’s lives but in actually making those decisions.

Recruited to positions of power in complex social settings, algorithms, we observe, produce consequences, both intended and unintended, which are cruel or worse. This outcome, we argue, is not an accident, the product of poor design, or malice. Our position on these consequences is two-fold: 1) that the unfortunate and unintended consequences will not be prevented (what we have termed the ‘Law of Unintended Barbarity’); and 2) that the use of algorithm-based decision making needs to be better understood – what problems are chosen, by whom and for what purpose – along with who, exactly, will suffer as a result.

Our paper is part of an effort to create a new means to ‘understand’ the nature of black box algorithmic technology and to locate it, to visualise it if you will, in its context as a demeaning, individualising, post-human technology. We suggest that this is not a necessary consequence of applying these technologies, but neither is it a choice, given that none of us has an informed understanding of what it is we are doing.

Before we elaborate on our two positions, we would like to illustrate our paper with several short examples of algorithmic technology and some ‘found art’ in the shape of artefacts produced to enable the application of particularly insidious algorithmic decision making.

Look out for the top-down nature of the effects, the asymmetric application of political and economic power, and the entirely opaque nature of these systems for employees, customers and clients alike. Our list is necessarily brief – in the world at large there are many, many more, and hundreds are in development as we speak.

Finally, in the last third of our paper we set out our hope that Art can be a medium whereby we can ‘open’ and visualise what algorithmic reasoning is, what it is doing to us and what it should or should not be in our society.

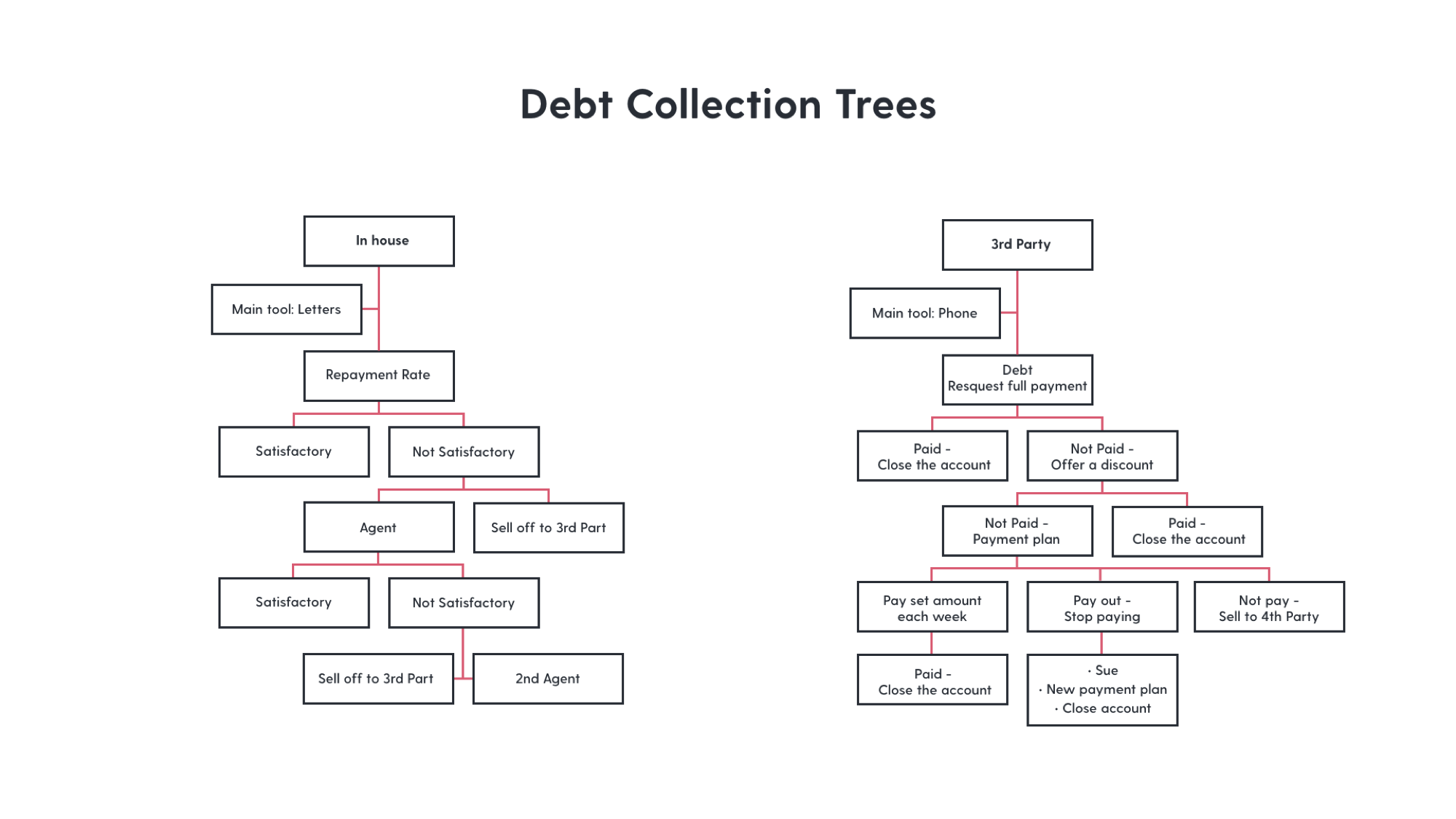

Debt Management Plans

Just in case you think of us as armchair critics, our first example comes from our own commercial work. We were recently employed to produce decision support applications for a large firm in the financial sector. We learned a lot from having to explicitly understand the relationship between personal indebtedness and algorithmic reasoning. Our work brought us into contact with a large data set of people struggling with personal debts: loans, overdrafts, store cards, unpaid bills etc.

On investigating, we found, counter to our initial expectations, that this data did not reflect the UK’s national picture of the distribution of debt. There were not more women than men among the clients (despite there being far more women than men in significant debt in the general population), and young people were under-represented in the sample. Most importantly, it was the profile of the creditors to whom the debt was owed, and not the amount of debt, that predicted whether individuals ultimately presented themselves as potential customers in order to ‘deal with’ their distress.

We realised that what we were looking at was a client base composed almost entirely (tens of thousands of people) of those who had sought the company’s services because of the strategies used by their various creditors (and by the agents who had subsequently taken ownership of their debt) to retrieve money. We found that the initial owner of the debt had applied an algorithm to decide whether to sell that debt on to a collection agency, and the collection agency had subsequently applied algorithmic reasoning to decide how to follow up their ‘customer’. This involved seeking court judgements, appointing bailiffs and other tactics, including selling on the debt themselves. Only at this point did people come forward, as customers of our client, to ask for (paid) help in constructing a debt management plan.

Just take a moment here and think about it. For a customer base of tens of thousands of people, what predicted their financial struggles was not how much they owed but who they owed it to.

So homogeneous was the data with respect to creditor profile that it quickly became obvious we were not the first to have algorithmically processed these people (or rather their data), and that they were likely a hyper-selected population. They were the product of repeated financial decision-making machines ruling them unfit for further profit and thus handing them off to the next rung on the ladder for ‘squeezing’ with increasingly punitive measures. This processing almost certainly created (or at least exacerbated) the conditions that contributed to their next algorithmic categorisation.

Health Insurance

Virginia Eubanks, in her book “Automating Inequality”, describes the difficulties she and her partner encountered when they were struck with intense healthcare needs only days after she changed jobs, and hence health insurance providers, in the US. She ran afoul of an algorithm designed to look for fraudulent behaviour in customers.

By making a significant claim so soon after starting on a new plan, on behalf of her partner, who needed pain medication known to be subject to abuse, she unknowingly triggered several red flags in the system and set in motion a terrible rigmarole.

Not only was she flagged as a suspect and faced with multiple frightening and expensive consequences – removal of coverage at a critical moment – but the systems she encountered for administering her health insurance and health care subsequently escalated and persisted with the ramifications of her misclassification, even after she had “corrected” the initial mistake. No ‘entry point’ existed for her to put right what should never have gone wrong, and the further she moved from the original error (encountering different platforms for acquiring and paying for health care, talking to different representatives of various services, etc.), the greater the difficulty of catching up with the ramifications of the initial misclassification.

As she herself observes, she was greatly helped by her experience, education and support networks in overcoming this nightmare at what was already a moment of great stress. Had she not possessed these things (as many people do not), her experience could have been so much worse.

Teachers

A serious example of the Law of Unintended Barbarity (LoUB) was explored in detail by Cathy O’Neil in Weapons of Math Destruction. An algorithm designed to quantify the effectiveness of teachers in the Washington, D.C. school district was deployed as the basis for firing a fixed percentage of low-performing teachers annually. It was designed to force teachers to improve their performance, clawing their way into the appropriate percentile to save their jobs.

The algorithm was based mostly on exam results, an easily obtainable and usable metric, ignoring any duties and responsibilities a teacher may have undertaken that could not be cheaply and reliably measured. As a result, teachers with excellent peer reviews and many years’ experience found themselves unemployed. When their situation was investigated, evidence was found that they had taken on classes whose grades had likely been inflated in previous years (perhaps by less scrupulous teachers gaming the system because they knew how the algorithm was likely to work). It is also easy to imagine a situation where a student in this district (once again, a poor one), having to deal with all the other stressors of life they encounter, did not place particular importance on end-of-year exams, especially relative to their more privileged peers.

One unintended consequence was that many of the sacked teachers were able to find new jobs over the border in Virginia, in a more prosperous school district with no such algorithmic regime. In short, good teachers were lost and the community as a whole was demoralised.
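The ranking rule described above can be sketched in a few lines of Python. This is not the district’s actual model: the simplified “value-added” score (the average change in each student’s exam result), the teacher names, the scores and the dismissal fraction are all hypothetical assumptions. Even this toy version shows two properties of any such scheme: a relative cut-off dismisses someone every year however well the whole staff performs, and a teacher who inherits inflated prior-year grades is penalised for a fall they did not cause.

```python
import math
from dataclasses import dataclass


@dataclass
class Teacher:
    name: str
    prior_scores: list[float]    # students' exam results last year
    current_scores: list[float]  # the same students' results this year


def score(t: Teacher) -> float:
    """Hypothetical, heavily simplified 'value-added' score:
    the average change in each student's exam result."""
    gains = [now - before for before, now in zip(t.prior_scores, t.current_scores)]
    return sum(gains) / len(gains)


def teachers_to_dismiss(teachers: list[Teacher], fraction: float) -> list[str]:
    """Rank teachers by score and return the bottom `fraction` of them
    (rounded up, so at least one teacher is always dismissed)."""
    n = max(1, math.ceil(len(teachers) * fraction))
    ranked = sorted(teachers, key=score)
    return [t.name for t in ranked[:n]]


# Invented data. Teacher C inherits a class whose prior grades were inflated,
# so this year's genuine results register as a fall.
cohort = [
    Teacher("A", [60, 65, 70], [66, 70, 75]),
    Teacher("B", [55, 60, 58], [60, 64, 63]),
    Teacher("C", [90, 92, 95], [80, 84, 86]),
    Teacher("D", [50, 52, 54], [53, 55, 57]),
]
print(teachers_to_dismiss(cohort, fraction=0.25))  # → ['C']
```

Replacing `cohort` with a staff in which every class improved would still produce a dismissal, because the cut-off is relative rather than absolute.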

Grandma

My own recent experience of the LoUB came three days after my Grandmother’s death. She had been placed in a home run by a large company and had been flagged as requiring special treatment: her care was partly paid for by the state and she was terminally ill. As such, the company’s invoicing system sent us our first (and only) bill, charging us for a full month of care, 24 hours after Grandma died and less than a week into her stay. Also notable was my conversation with the member of staff I contacted to correct the invoice, who noted that we were not the first to have experienced this and yes, wasn’t it terrible that the system threw up things like this?

“Justice”

Our final examples come from the many applications of algorithmic toolsets to criminal justice systems. There are many cases we could highlight, but we have chosen:

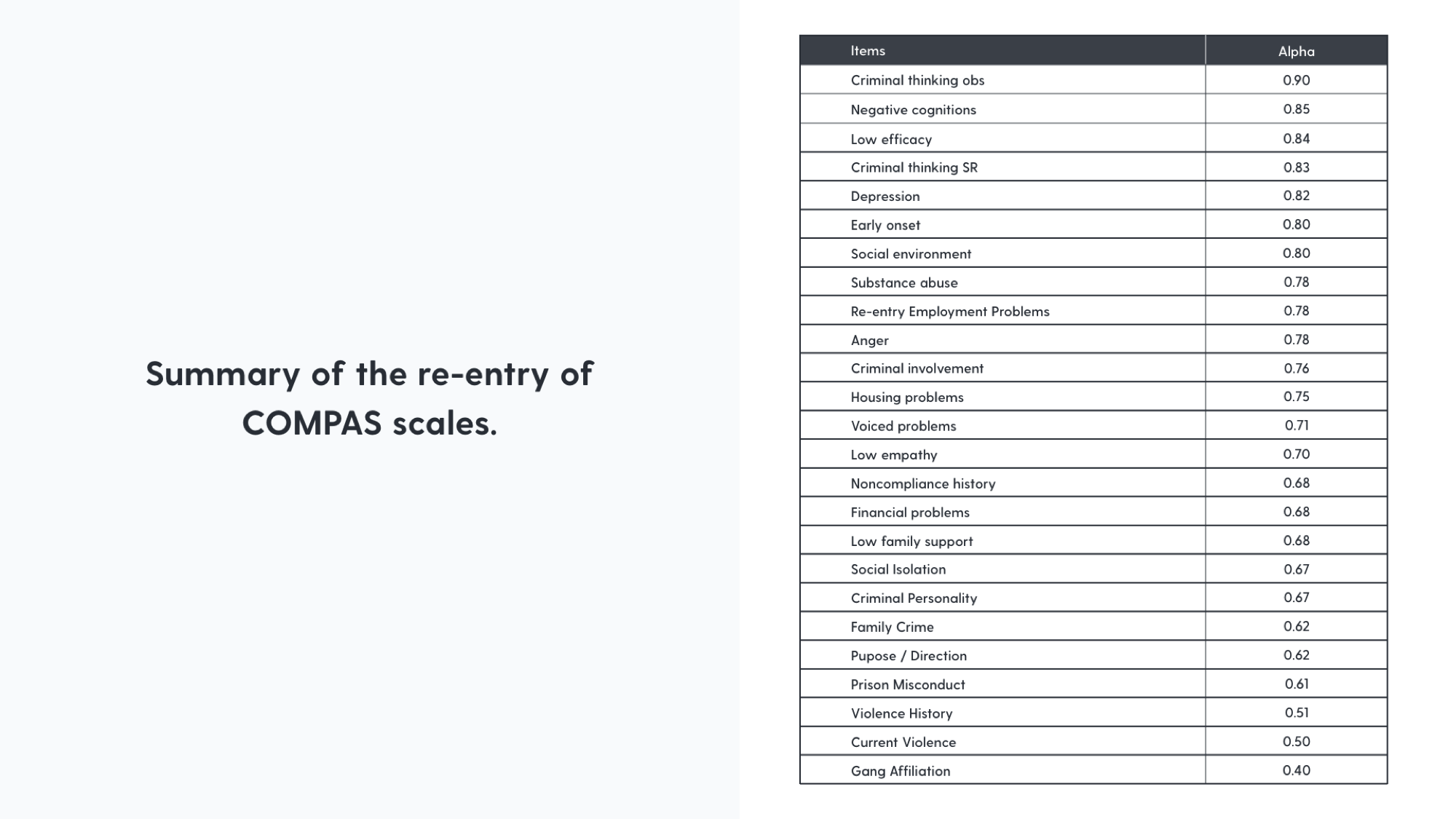

COMPAS

Correctional Offender Management Profiling for Alternative Sanctions

“In overloaded and crowded criminal justice systems, brevity, efficiency, ease of administration and clear organization of key risk/needs data are critical.” (Northpointe, 2012)

HART

Durham Constabulary’s Harm Assessment Risk Tool

“As is common across the public sector, the UK police service is under pressure to do more with less, to target resources more efficiently and take steps to identify threats proactively” (HART review, 2017)

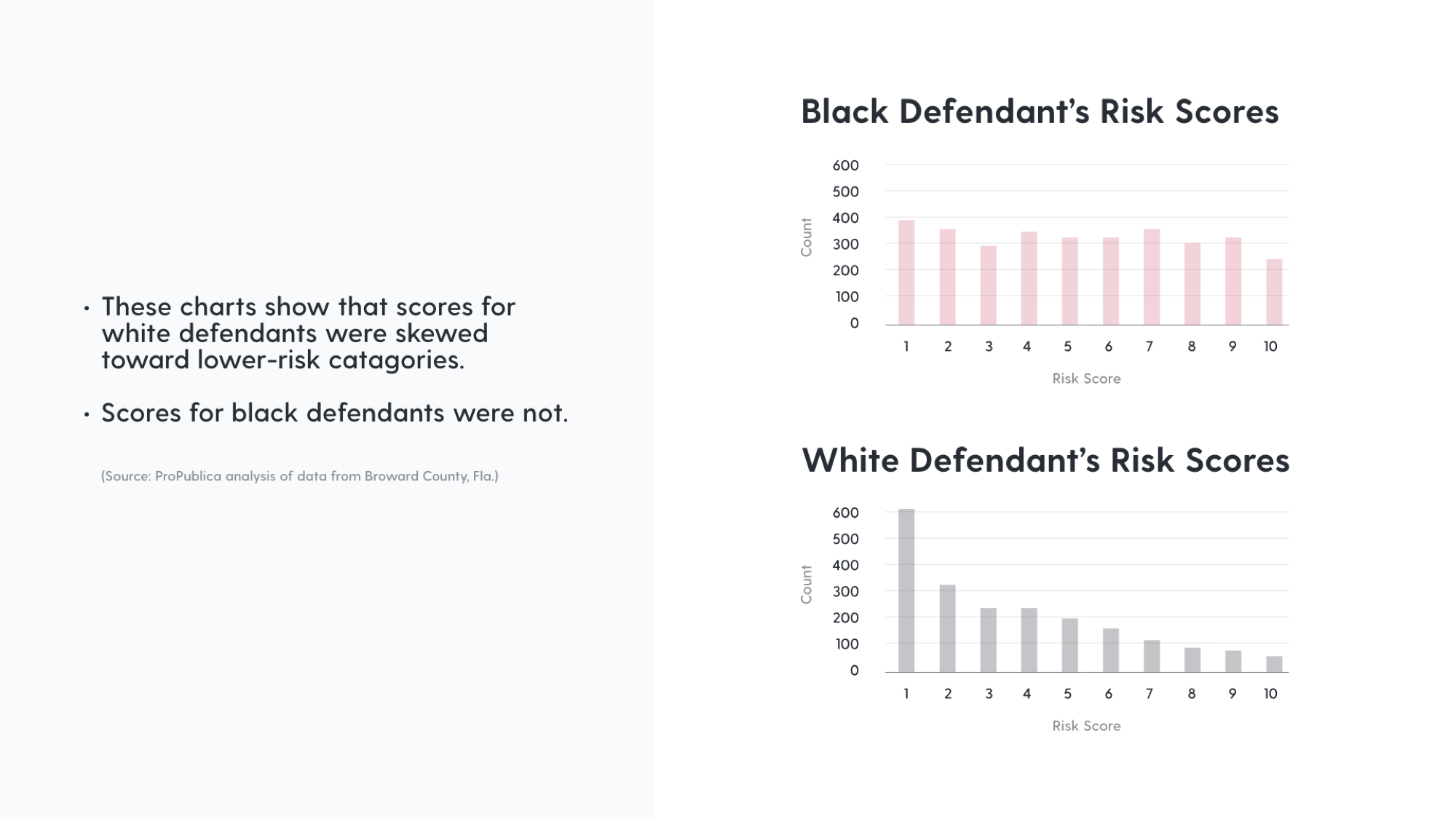

These are two of several software systems used in support of sentencing and related decisions, thanks to their apparent ability to predict the risk of recidivism amongst defendants.

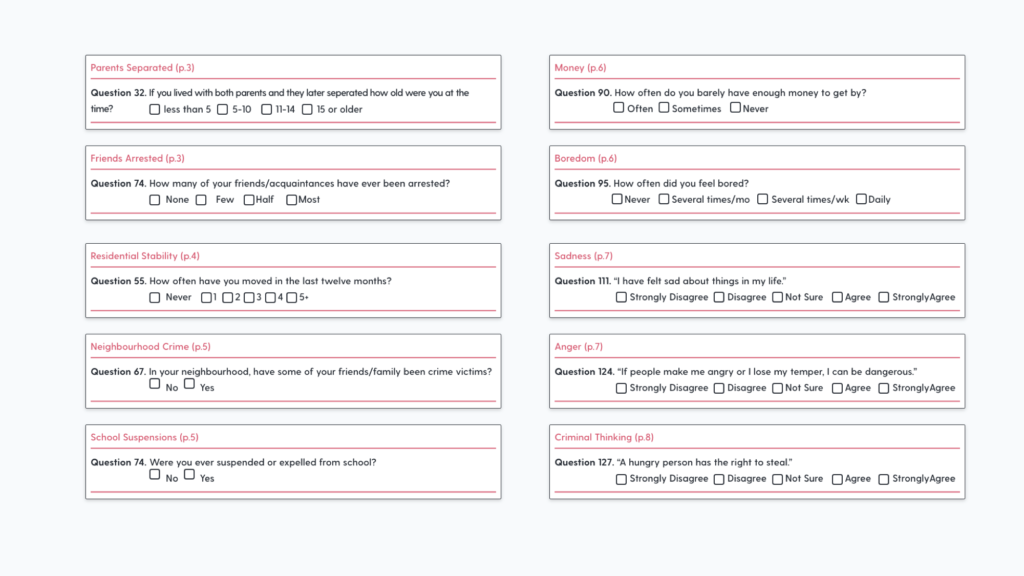

As you can see from the slide, feeling negative or depressed, having low self-efficacy, being a drug user, unemployed, young or angry, and coming from a poor environment are all counted as predictive. You will be treated more severely by the system as a result.

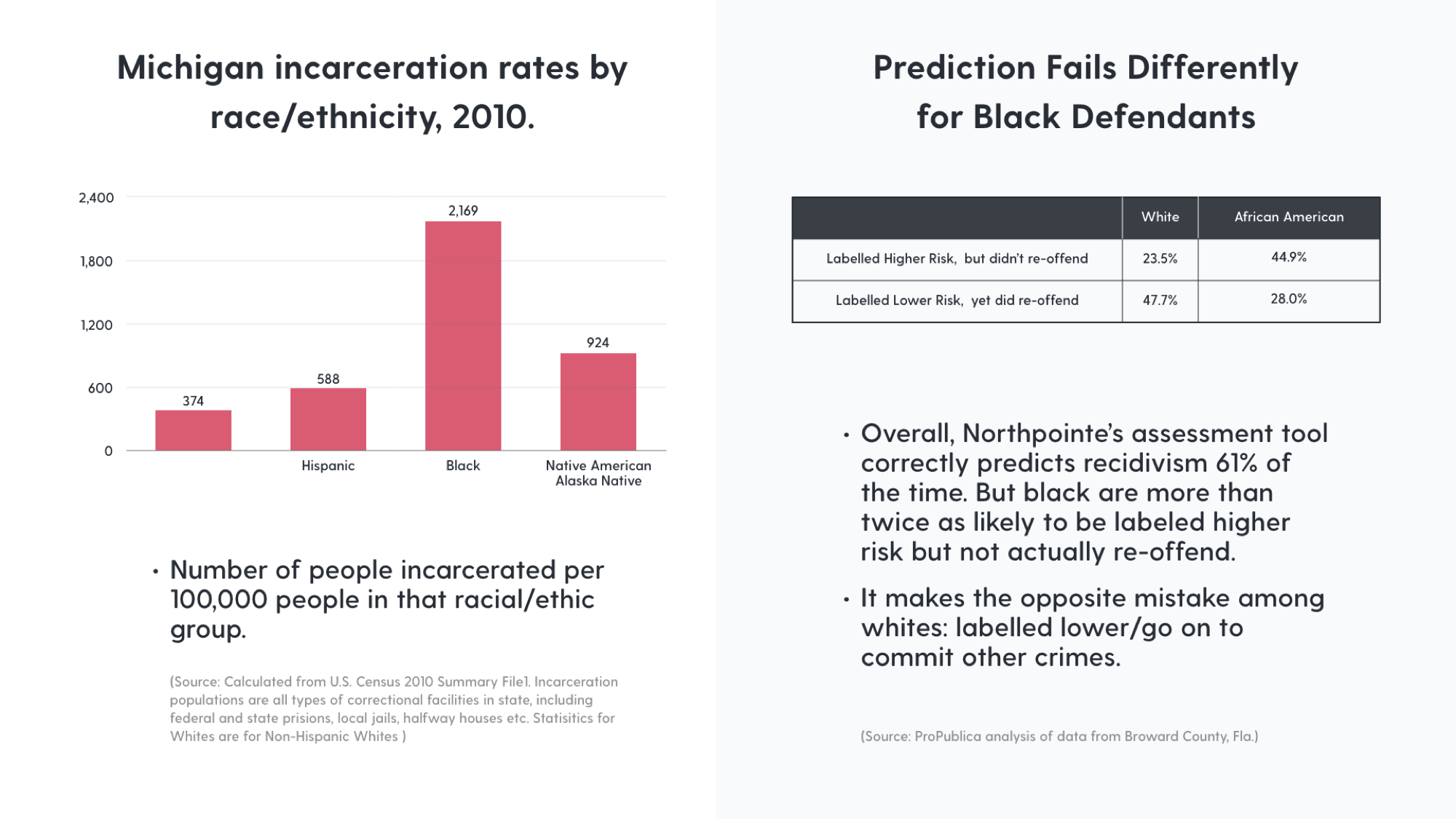

Perhaps most significant is the fact that some of the published research on the use of COMPAS, namely the validation and re-validation studies conducted in Michigan in 2010 and 2012, never mentioned race, black or ethnic minorities, not once. This is especially important given this picture of Michigan’s prison population at that time.

These data are standardised to show the number of people incarcerated per 100,000 citizens of Michigan in each ethnic group. Given that black Americans are incarcerated at a rate 5.8 times that of white American citizens, the algorithm is an example of colour-blind racism at state level. The algorithm is both the agent of oppression and the excuse for it.
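Standardising per 100,000 is what lets groups of very different sizes be compared, and a figure such as “5.8 times” is simply the ratio of two such rates. A minimal sketch of the arithmetic follows; the counts are invented, chosen only so that the ratio comes out at 5.8, and are not the Michigan data shown on the slide.

```python
def rate_per_100k(incarcerated: int, population: int) -> float:
    """People incarcerated per 100,000 members of a population group."""
    # Multiply before dividing so round-number inputs give exact results.
    return incarcerated * 100_000 / population


def disparity_ratio(rate_group: float, rate_reference: float) -> float:
    """How many times higher one group's rate is than a reference group's."""
    return rate_group / rate_reference


# Invented counts, NOT Michigan's actual figures: a group of 500,000 people
# with 2,900 incarcerated, and a reference group of 1,000,000 with 1,000.
rate_a = rate_per_100k(2_900, 500_000)    # 580.0 per 100,000
rate_b = rate_per_100k(1_000, 1_000_000)  # 100.0 per 100,000
print(round(disparity_ratio(rate_a, rate_b), 1))  # → 5.8
```

The smaller group has fewer people in prison in absolute terms, yet its standardised rate is nearly six times higher, which is exactly the pattern raw counts would hide.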

In Durham, the goal is a more efficient use of police resources, with more consistent and evidence-based decision making. Offenders judged by the HART algorithm to be at ‘moderate’ risk of reoffending can be offered inclusion in the Checkpoint programme, which provides help with drug or alcohol abuse, homelessness or mental health. Those judged to be at high or low risk cannot be included. Those judged at high risk of reoffending are not only denied such access to resources but will receive sanctions instead.
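The eligibility rule itself fits in a few lines. This sketch assumes the model has already assigned one of three bands; how HART arrives at a band is not modelled, and the enum, function and route labels are hypothetical. What it makes visible is that support is gated on a forecast rather than on need: the same drug, housing or mental health problems lead to help or to sanctions depending on which band the model assigns.

```python
from enum import Enum


class RiskBand(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


def checkpoint_route(band: RiskBand) -> str:
    """Map a forecast risk band to the route described in the text.

    Only a 'moderate' forecast makes someone eligible for Checkpoint.
    'High' is excluded and routed to sanctions; the text does not say what
    happens to 'low', so it is left as a bare exclusion. The route labels
    are hypothetical, not Durham Constabulary's own terms.
    """
    if band is RiskBand.MODERATE:
        return "eligible for Checkpoint"
    if band is RiskBand.HIGH:
        return "excluded: sanctions"
    return "excluded"


for band in RiskBand:
    print(f"{band.value:>8} -> {checkpoint_route(band)}")
```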

This system is now operational but has been subject to critique, and the status of postcodes as predictors (which means that poor people from poor areas are judged to be at higher risk) is under review.

What ~~Could~~ Will Go Wrong?

It is our contention that most of the cruelty we have spoken about here is unintended, an unfortunate effect of the employment of algorithmic decision making.

For example, although such an algorithm may be designed to help with fair sentencing, it is loaded with cultural and intellectual values posing as reasonable assumptions about ‘justice’.

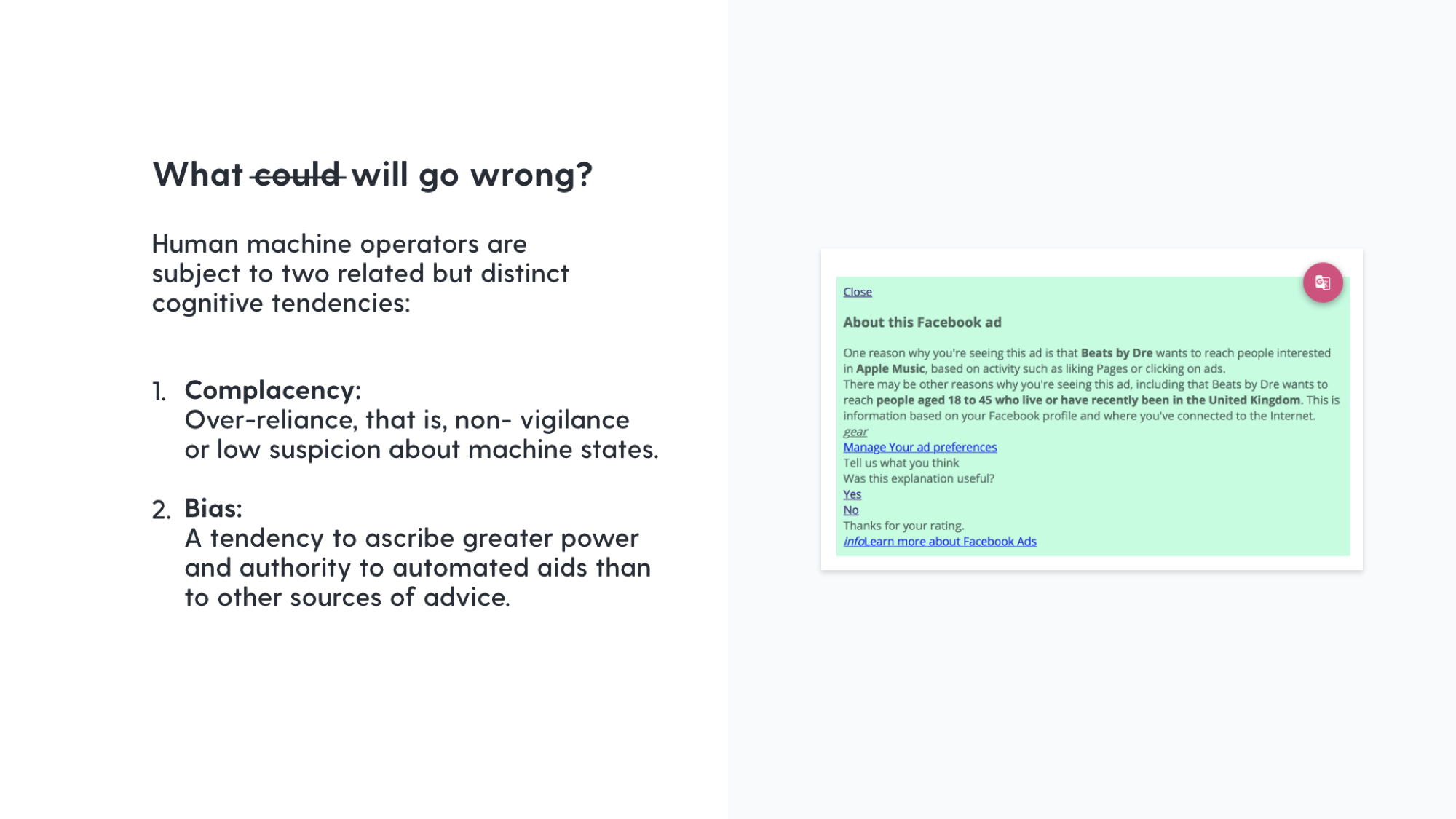

There are three empirically observable and very robust human behaviours which we have seen have a significant impact on the development and application of algorithms, particularly in social settings.

These related but distinct cognitive tendencies are:

- Complacency (or over-reliance, that is, non-vigilance or low suspicion about machine states)

- Automation bias (“tendency to ascribe greater power and authority to automated aids than to other sources of advice”)

- Bounded rationality – limits to our abilities as decision makers in formulating complex problems and processing information.

Automation bias represents a robust phenomenon that:

- can be found in many different settings,

- occurs in both naïve and expert participants,

- seems to depend on the [level of automation] and the overall reliability of an aid [high reliability worsens human performance]

- cannot be prevented by training or explicit instructions to verify the recommendations of an aid

- seems to depend on how accountable users of an aid perceive themselves for overall performance

We are building artificially bounded systems – they don’t have all the information they need, nor all the possible cost assumptions built into them. The problems they are employed to solve, or the people they are to police, are the problems identified by the actors involved, using their own understanding of the world.

Ultimately, the person who has paid for the AI might well be happy with the output they are getting, but the other people, its victims, are surrendered to the internal logic of the system in a way that cannot possibly work for them.

Markus (2017) describes how automated decision aids contributed to the destruction of the lending market in the US and the crash of 2007. What happened was that the algorithm “became a tool for use by novices with very different capabilities and motivations. In addition, the participation of humans in the control loop diminished sharply as incentives, production pressures, and cognitive biases discouraged human supervision of, and intervention in, automated decisions.”

Complacency and bias in using these tool sets seem to be unavoidable. Police will defer to machines, lawyers will defer to them, and judges will defer to them. In trying to extract the most efficient use of the public purse – that itself being an ideological position – school administrators will encode and then use tools which will then tell them to get rid of their teachers. We all know at least some of what went on in the build-up to and meltdown of the financial crash. Well, not only are the tools that helped deliver it still being used, but the people using them are more reliant on their guidance than ever.

A Rigorous Calculus of Bigotry and Spite

The Law of Unintended Barbarity Part Two

Art – ‘a sensible form of data that suggests a way to experience and construct our reality’ Maet (2013)

We have been upset by our own work, finding ourselves needing to understand what we found out about our practices and assumptions.

We do not write from the point of view of critics ‘outside’ the system, nor do we claim to be more knowledgeable than our peers. When we began to look at the people in our data we realised we were looking at the results of many, many occasions when they had already been sorted – as victims – by algorithms in the employ of powerful organisations.

At the point we learned this, we were probably the only people in Europe who knew it. Certainly our employers didn’t, their clients didn’t, and their clients’ creditors didn’t.

What means exist, outside of politics and technology and not reliant on the public or on policy, to survive a world where algorithmic decision practices are ubiquitous?

Our hope, in presenting here and in dialogue with our colleagues, is to find in Art the medium through which we can change our (that is, all of our) relationships with the algorithms with which people seek to manage us. We must always remember that somebody owns and deploys these tools, and in some way we feel that they should be held to account and should be asked to hold themselves to account.

We are not trying to invoke art as a way of ‘fighting’ this, but as a way of having a different relationship with the machine: not as subjects, not as dependants, but as something else.

What is needed, urgently, is a change of perspective in these debates, away from the neoliberal political ‘reality’ of legal arguments, debates about technologies and the race to create ever more regulated and individualised societies. Art sometimes operates in a space which gives it great power to engage on its own terms with problems at this scale. Technology cannot, and political action will not, effectively engage with these issues, not least because they are very much a part of the problem.

What follows are some accounts of our experimentation with other ways of using automation and algorithmic practice.

The Tenants Union

In Britain, if you are trying to find somewhere to live you are likely to find your income, relationship status, work history, criminal history and family scrutinised, often of course by algorithms.

Since 2004 the British Government has introduced multiple regulations for the private rented sector. Typically this is legislation designed to harmonise the environment for landlords. One significant example is the requirement that all private deposits be held by one of three third-party protection schemes, ostensibly to support dispute resolution and to ensure that the deposit is untouched by landlords until it is required under the terms of leaving the contract. In practice these schemes have links to landlord associations, are opaque to renters, and are very lenient on landlords who enter inaccurate data from which they may later be able to benefit.

The Act that introduced these schemes also encoded a penalty for the landlord, of up to three times the original deposit, for failing to comply with the requirement to secure the money in this way.

An unforeseen consequence of the legislation therefore seems to have been the opportunity to use algorithms to generate a potentially lucrative activity: operating on behalf of mistreated tenants to reclaim ‘misplaced’ deposit money, and more.

As part of a project we have been involved in, we have developed not just a way of picking apart legislation so that a person can interact with it, to their own benefit, without requiring expensive legal knowledge, but also a way of presenting this knowledge, and the options for using it, so as to complement mass actions based on confirmed or predicted infringements of their rights as tenants. For example, you could take photos of your room and see whether its size meets the legal requirement for your property, then find out whether people nearby have reported similar conditions. Ultimately the project would like to support a user all the way to joining with others and taking steps to be recompensed and, crucially, to create substantial pressure for change by changing the material conditions a property owner operates in.

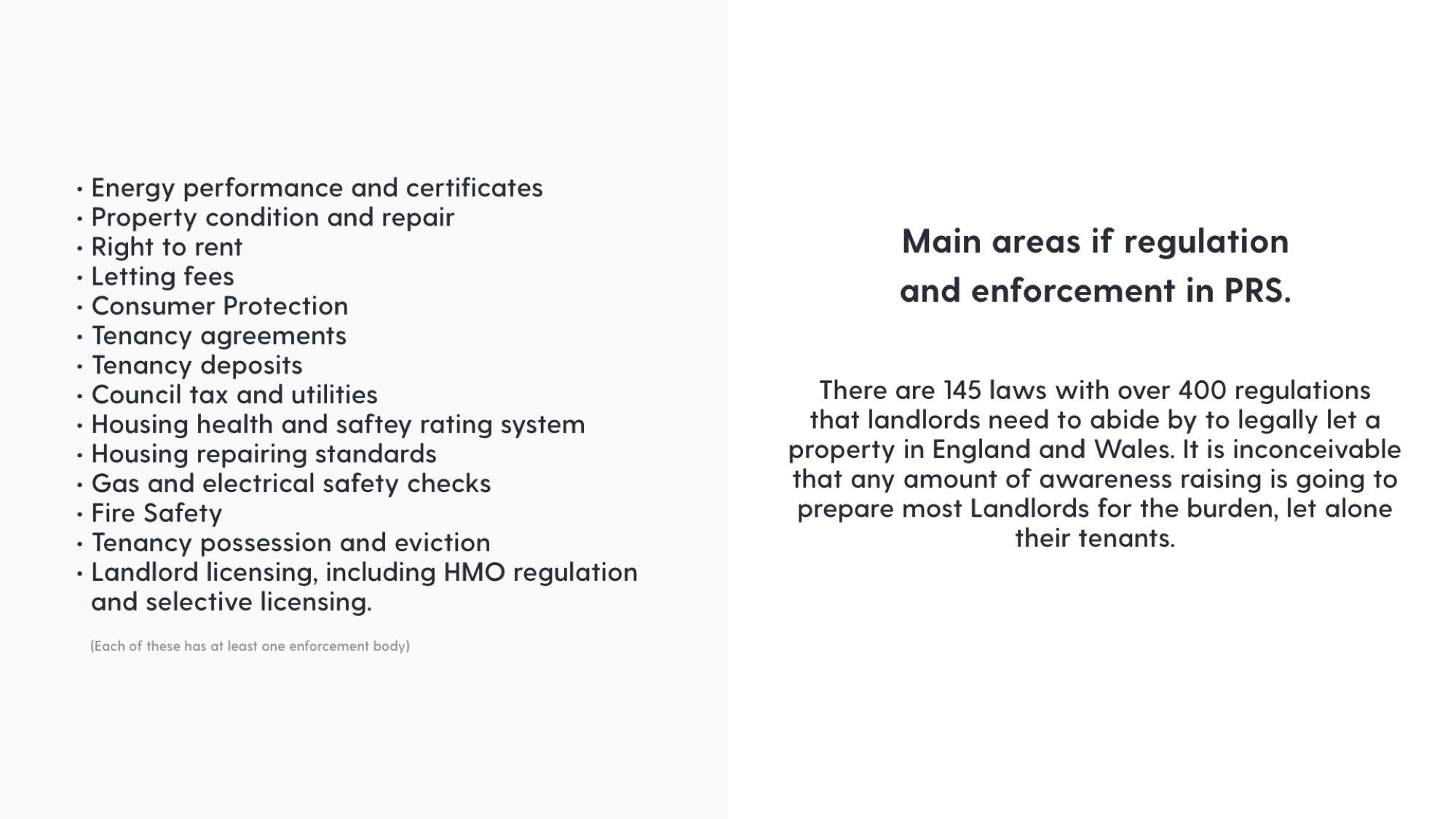

Furthermore, as can be seen in the example above, there are dozens more areas of regulation for the private rented sector and dozens of regulatory bodies in place to arbitrate disputes. With access to datasets such as the (open) Houses in Multiple Occupation Licences Register, we have demonstrated that it is possible to ‘mine’ for potential infringements and empower citizens to make use of legislation they may never have heard of, inverting the power relationship that algorithms are typically in service of.
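A minimal sketch of this kind of ‘mining’, assuming two hypothetical inputs: a list of licensed addresses taken from a register, and tenants’ own reports of how many people and households share a property. The record layout, the crude address matching and the threshold values are illustrative assumptions, not a statement of the law; real register data would need proper address matching, and any flag is only a potential infringement to be checked, not a finding.

```python
from dataclasses import dataclass


@dataclass
class TenantReport:
    address: str
    occupants: int   # people living in the property, as reported by a tenant
    households: int  # separate households among them


def normalise(address: str) -> str:
    """Crude address key: lower-case, drop commas, collapse whitespace."""
    return " ".join(address.lower().replace(",", " ").split())


def possible_unlicensed(
    reports: list[TenantReport],
    register: list[str],
    min_occupants: int = 5,   # placeholder threshold, not a statement of the law
    min_households: int = 2,  # placeholder threshold, not a statement of the law
) -> list[str]:
    """Return addresses whose reports suggest a licensable occupancy
    but which do not appear in the licence register."""
    licensed = {normalise(a) for a in register}
    flagged: list[str] = []
    for r in reports:
        key = normalise(r.address)
        meets_threshold = r.occupants >= min_occupants and r.households >= min_households
        if meets_threshold and key not in licensed and key not in flagged:
            flagged.append(key)
    return flagged


# Invented example data.
register = ["12 Mill Lane, Exampletown", "4 High Street, Exampletown"]
reports = [
    TenantReport("12 Mill Lane, Exampletown", 6, 4),  # on the register
    TenantReport("7 Park Road, Exampletown", 6, 5),   # not on the register
    TenantReport("7 park road  exampletown", 6, 5),   # same house, second report
    TenantReport("9 Park Road, Exampletown", 2, 1),   # below the thresholds
]
print(possible_unlicensed(reports, register))  # → ['7 park road exampletown']
```

Pooling reports from several tenants is what turns individual complaints into the kind of evidence base a mass action could draw on; duplicate reports of the same address are collapsed into a single flag.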

Future

We dream of more projects like this. We would like to see repeated, front-and-centre efforts to foreground how algorithms are shaping our lives in cruel ways, and the opportunities for inverting this, so that it can’t be ignored.

More and more we encounter artefacts of algorithmic reasoning that invite a playful response to what would otherwise be a brutish situation. One of us once had the experience of being part of the UK’s unemployment system in the early days of computerised supervision. Being a relatively simple system, based around expected answers to a series of digital forms and behaviours, it proved easy to automate, and thus to ensure that various algorithmically generated hoops were jumped through in perpetuity. One unintended side-effect, an Unintended Benevolence if you will, was the kudos and respect offered by an otherwise cold system because so many rules and expectations were being met. One can only speculate at the possibilities of mass adoption of such software.

We invite our audience today to help us map out the interfaces and consequences of algorithmically supported decisions and how they deal with us. We invite you to help us engage with these entities/objects/articles in order to create something else: an Art which forces the software/algorithm/rules to transcend their often banal and cruel roles.