This talk – a midpoint summary of the Routes to Justice Project – was given at the Tech for Justice Cymru event organised by the Speakeasy Law Centre and The Legal Education Foundation on Tuesday 3rd December 2019. Many thanks to them for inviting us.

Etic Lab LLP has been running a research project, co-funded by Etic Lab and Innovate UK, alongside our partners RCJ & Islington Citizens Advice, LawWorks and the PSU for almost a year. This presentation is the first public account of what we have been doing, from the perspective of the technological developments arising from our work. It does not cover our findings to any significant degree; we will be publishing a detailed account of our method and findings in March 2020.

The purpose of the project was to determine the feasibility of applying AI and Big Data technologies within the Access to Justice sector and to identify candidate solutions or models. Because we were focussed upon determining the scope for successful interventions, rather than addressing any specific tool or problem, we used soft systems methodology to structure our work.

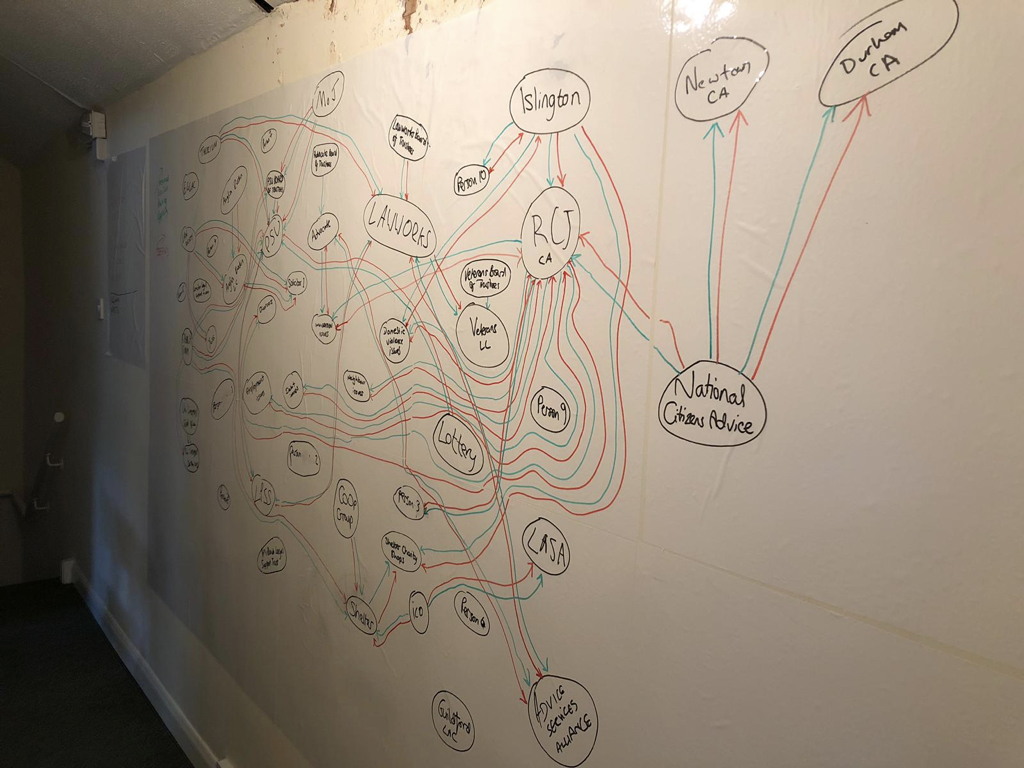

Our project was designed and funded on the explicit premise that we are examining an ecosystem, a complex of interacting actors with multiple interdependencies built into the networks both formal and informal. We note that this is the case even if many aspects of these networks are not always recognised by those agencies which make up the justice ecosystem. The concept of an ecosystem refers to a complex system of interdependent subsystems coevolving and adapting to dynamic changes across both internal and external environments. It does not necessarily imply that such behaviour is the result of self-aware calculation.

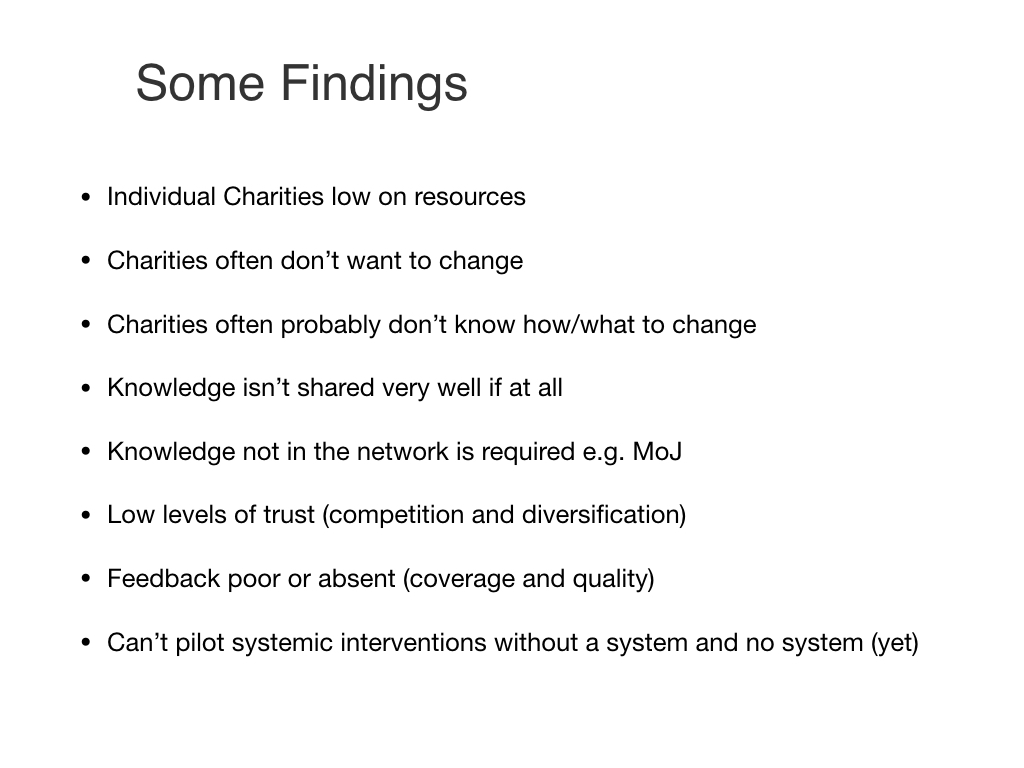

It was our goal to understand the ecosystem so that any interventions we examined could have a significant, even systemic, impact on the sector as a whole. Prior to the project we had already noted how many technology innovations in the sector failed to scale and, indeed, frequently did not have a sustainable future. Given the cost of AI-based interventions, we decided it was not acceptable to ignore these issues and just build some tool or process for the sake of it, simply because we could.

Our work began by designing interview schedules and conducting field interviews with significant agencies and actors. Using snowballing, our range of respondents grew quickly, and we were fortunate in being able to speak to executives, managers and people in the front line of delivery in agencies big and small. In parallel with this work we examined the academic literature and the publications of all the significant agencies and actors, and reviewed the full range of Law Tech products and services in the rapidly developing market.

Our next task was to build an Ontology, a key tool for helping us understand where in the ecosystem we might intervene and what properties such an intervention would need to have in order to have impact and sustainability. It would need to show us what we should do, where in the system we should do it and what we would need to provide so that it would have maximum systemic impact.

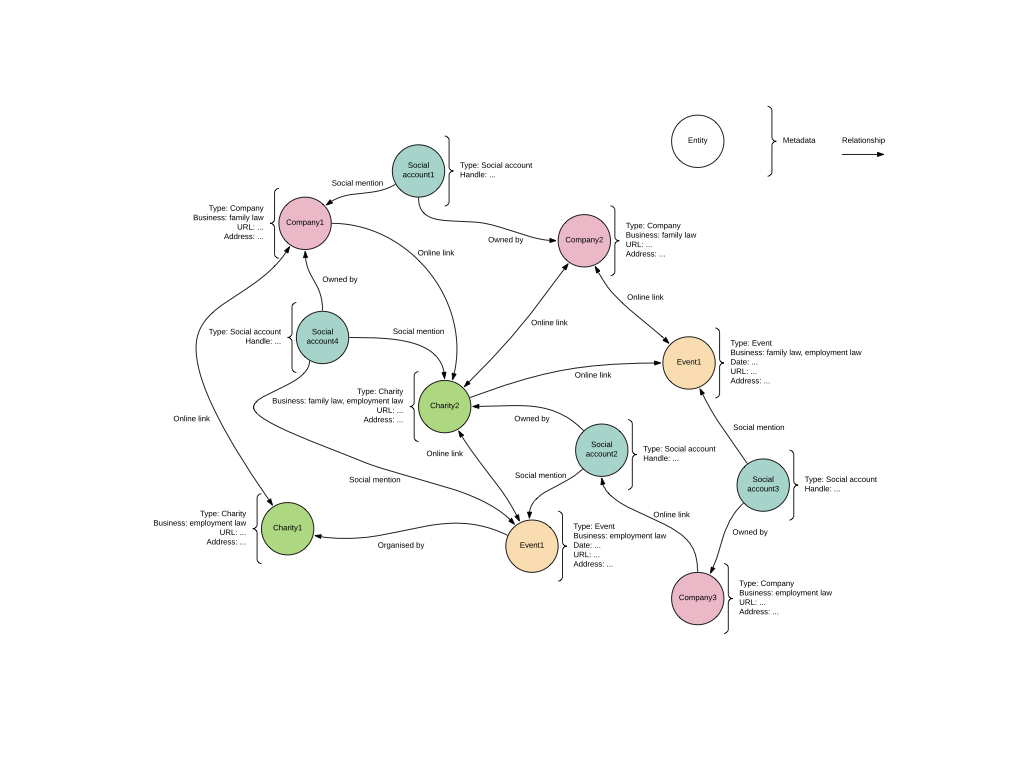

In the simplest terms, the word “ontology” describes a diagrammatic representation of a particular domain (in this case, the affordable justice system) which aims to identify and categorise all of the entities which exist within it as well as to map the various relationships between them. A Class is the most general level of categorisation and is defined by a list of attributes covering the basic activities carried out by that type of entity, its organisational structure, available resources and other relevant criteria. We decided that each attribute must be measurable, either as a quantifiable value or from a pre-determined list of options. The ontology needed to be defensible on the basis of evidence, but also it would need to be computable as a potential element of a future technological intervention.
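As a rough illustration of what "measurable" and "computable" could mean here, the following minimal sketch models a Class as a named set of attributes, each of which is either a numeric value or a choice from a fixed list. The class name `AdviceAgency` and the attributes `staff_count` and `delivery_mode` are hypothetical examples, not entries from the project's actual ontology.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Attribute:
    """One measurable attribute of an ontology class (illustrative only)."""
    name: str
    options: tuple = ()  # empty tuple means "any quantifiable (numeric) value"

    def accepts(self, value) -> bool:
        if self.options:
            # Categorical attribute: value must come from the fixed list.
            return value in self.options
        # Quantifiable attribute: bool is a subclass of int, so exclude it.
        return isinstance(value, (int, float)) and not isinstance(value, bool)


@dataclass
class OntologyClass:
    """A general category of entity, defined by its list of attributes."""
    name: str
    attributes: dict = field(default_factory=dict)

    def add(self, attribute: Attribute) -> None:
        self.attributes[attribute.name] = attribute

    def validate(self, record: dict) -> list:
        """Return a list of problems; an empty list means the record fits."""
        problems = []
        for name, attribute in self.attributes.items():
            if name not in record:
                problems.append(f"missing: {name}")
            elif not attribute.accepts(record[name]):
                problems.append(f"invalid: {name}")
        return problems


# Hypothetical class and attributes, chosen only for illustration.
advice_agency = OntologyClass("AdviceAgency")
advice_agency.add(Attribute("staff_count"))
advice_agency.add(Attribute("delivery_mode", ("face_to_face", "telephone", "online")))

good = {"staff_count": 12, "delivery_mode": "telephone"}
bad = {"staff_count": "a few"}

print(advice_agency.validate(good))  # no problems reported
print(advice_agency.validate(bad))   # one invalid value, one missing attribute
```

Restricting every attribute to a number or a fixed option list is what makes it possible to check a record against the ontology mechanically, as `validate` does above.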

In essence we quickly found ourselves with a problem that is very common in the ecosystem we were studying: we needed to know who was doing what and where. This is a recurring problem that almost defines any attempt to understand what is going on, or should be happening, within the sector. That mapping is a ubiquitous problem within the justice sector is both a symptom of the legal advice system’s problems and, to some small degree, itself a cause of problems, given the fundamentally difficult nature of mapping in this context. A great deal of time and money has been, and is being, spent on mapping exercises that are never comprehensive, are prone to rapid decay given the high rate of change in the ecosystem, and are necessarily limited in terms of the data that is available and can be effectively retrieved. Etic Lab was very fortunate in that we had the skills and other resources required to address this problem and a mission to do it in a way that could be sustainable.

Although we developed the initial software system simply to help find the data we needed to understand the legal advice sector, it became apparent as we worked on and enhanced the core system that we were in fact building a tool that could be valuable to the sector in and of itself.

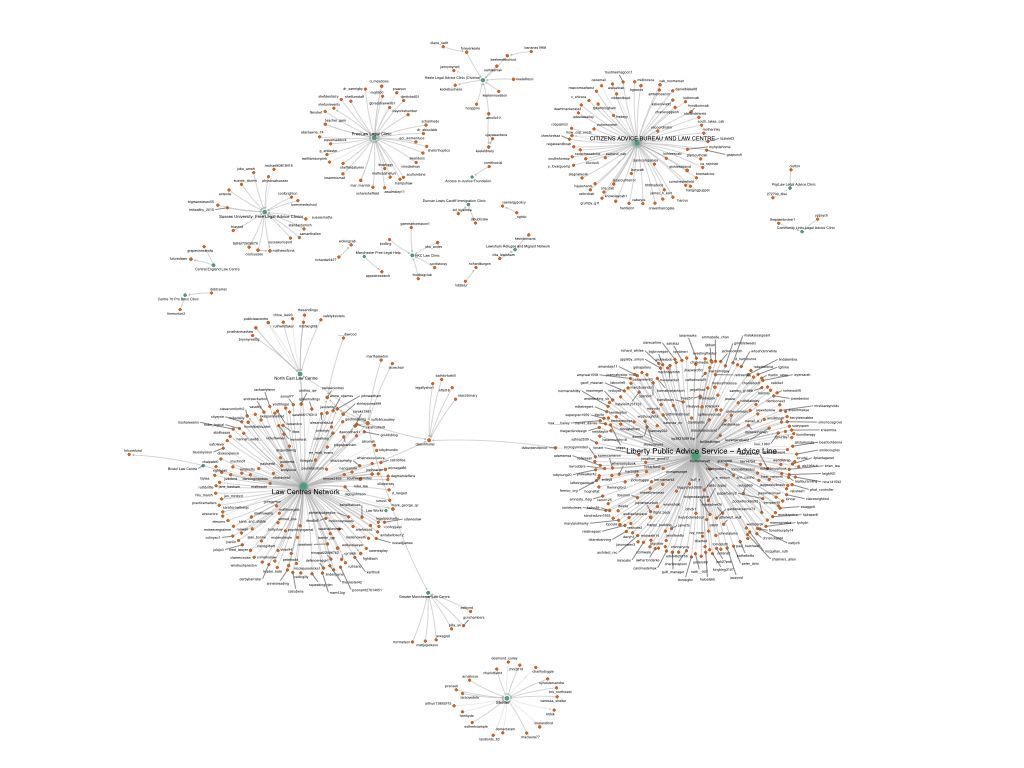

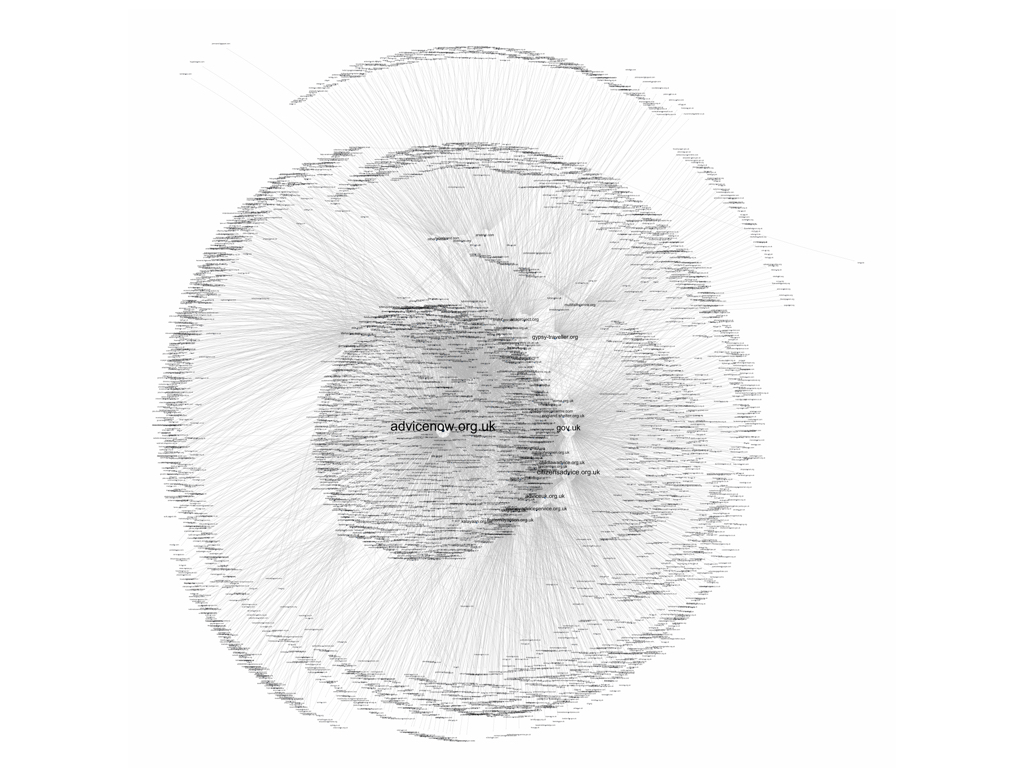

We began by building two different tools, one a very sophisticated general-purpose tool for acquiring, storing and indexing web sites with all of their attendant links. This tool was used to create a data set of candidate actors, or rather their websites, and by successive waves of exploration we were able to find thousands and then tens of thousands of web sites associated by live links with our core sample of access to justice sector digital properties. We then used graph theory and data visualisation tools to allow us to examine what we had found. At this stage we were able to see how some actors were central within the digital ecosystem and others less so, as the theory predicts. Because we were able to access a large range of data about a huge number of actors, it was all too apparent from the networks that what agencies were actually offering and doing varied hugely. In fact our problem then became a task of classifying these actors, in particular determining whether they were central to the access to justice ecosystem or not. This is a problem some workers have tried to solve so that the quality of the actors included in mapping exercises can be measured in some way and used to decide which actors and agencies to include and exclude.
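To make the idea of "central" actors concrete, here is a minimal sketch of one standard graph measure, in-degree centrality, computed over a list of site-to-site links. It is an illustration only: the talk does not say which graph measures were actually used, and the `.example` domains and the `LINKS` data are invented for the purpose.

```python
from collections import defaultdict


def in_degree_centrality(edges):
    """Share of the other sites in the graph that link to each site.

    `edges` is an iterable of (source_site, target_site) pairs, one per
    hyperlink found by a crawl. Self-links and duplicate links are ignored.
    """
    nodes = set()
    inbound = defaultdict(set)
    for source, target in edges:
        nodes.update((source, target))
        if source != target:
            inbound[target].add(source)
    denominator = max(len(nodes) - 1, 1)  # avoid dividing by zero
    return {node: len(inbound[node]) / denominator for node in nodes}


# Invented link data: three sites point at a hub, which links back to one.
LINKS = [
    ("a.example", "hub.example"),
    ("b.example", "hub.example"),
    ("c.example", "hub.example"),
    ("hub.example", "a.example"),
]

scores = in_degree_centrality(LINKS)
most_central = max(scores, key=scores.get)
print(most_central, scores[most_central])
```

A site that many others link to scores close to 1, while a site nobody links to scores 0. On a real crawl of tens of thousands of sites, a graph library and richer measures would likely be more appropriate than this hand-rolled count.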

Because we could not find an accessible, objective and replicable way of performing this quality management or approval task, we elected to collect more data so that we could allow the actors to speak for themselves, as it were, through the ways in which they speak and act digitally.

ELNAT

What we have done is to create a Machine Learning tool and a large number of digital data points that allow our system to categorise actors in the justice ecosystem in a (relatively) transparent and reproducible manner. At the same time, the rich, not to say unique, set of attributes associated with each actor we can identify in the ecosystem can be queried in many different ways. This essentially provides us with a platform from which to create a variety of tools and services that can be deployed to understand, manage and indeed provide access to the sector.
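The general shape of such a categorisation step can be sketched with a deliberately simple method, a nearest-centroid classifier. This is not a description of how ELNAT works, which the talk does not specify. The two features (imagined here as scores between 0 and 1 derived from an actor's website) and the labels `core` and `peripheral` are assumptions made purely for illustration.

```python
def fit_centroids(examples):
    """Average the feature vectors of the labelled examples, per label.

    `examples` is a list of (feature_vector, label) pairs.
    """
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in sums[label]]
        for label in sums
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to `features`."""
    def squared_distance(label):
        return sum((c - f) ** 2 for c, f in zip(centroids[label], features))
    return min(centroids, key=squared_distance)


# Invented training data: [feature_1, feature_2] per actor, hand-labelled.
TRAINING = [
    ([0.9, 0.8], "core"),
    ([0.8, 0.9], "core"),
    ([0.1, 0.2], "peripheral"),
    ([0.2, 0.1], "peripheral"),
]

centroids = fit_centroids(TRAINING)
print(classify(centroids, [0.70, 0.75]))
print(classify(centroids, [0.05, 0.30]))
```

A method like this is easy to inspect, since each decision reduces to "which average profile is this actor closest to", and it gives the same answer every time for the same data, which is the sense of transparency and reproducibility at stake.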

We have logged the extent, direction and level of social media productivity for all of the access-to-justice actors who use social media. We have designed and built a tool that measures the level of digital sophistication of the online presence of all of the actors. We have incorporated the facility to fold publicly available data from the Charity Commissioner and Companies House datasets into the digital footprint of the actors identified by the system. We are developing tools to represent the expressed goals and concerns of each actor using their own words. Because we are creating a lot of new data, including metadata, this system can provide information that did not previously exist, in combinations that have never before been thought of. For example, we have an insight into the rate at which actors (by size, location, target activity etc.) in the sector arrive and depart, the relationship between social media sophistication and the size, type or age of an organisation, and so on. Perhaps most importantly, we could now provide a tool that allows a user immediate access to all of the actors available in the ecosystem, using a host of criteria, from a database that is always up to date.

It was not the goal of our project to build production-grade commercial products, nor indeed is the tool we have built, ELNAT (Etic Lab Network Analysis Tool; we never claimed to be imaginative), the main output of our project. As you can see from the last slides in the deck, we have other ideas on that front. Nevertheless, we have now had feedback from a number of charities and services who have asked to use the system, some of them to address problems we had never imagined. Etic is now trying to think of ways to bring a production version of ELNAT into existence as a tool for the justice sector as a whole, not least because we have already begun work on extensions to the core system that will bring some remarkable functionality.